Test changes with statistical significance

A/B tests, multivariate tests, and robust targeting & exclusion rules. Analyze usage with product analytics and session replay.

boosted engagement by 40%

"Y Combinator uses PostHog's experimentation suite to try new ideas, some of which have led to significant improvements."

increased registrations by 30%

"This experiment cuts that in half to a 30% drop-off – a 50% improvement without a single user complaining!"

unthrottled event ingestion from a previous analytics provider, leading to better insights

"PostHog, which can do both experiments and analytics in one, was clearly the winner."

Features


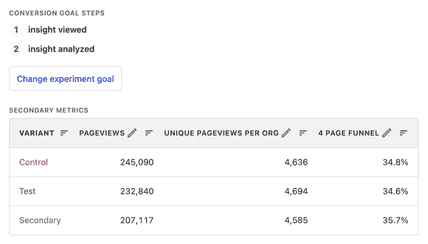

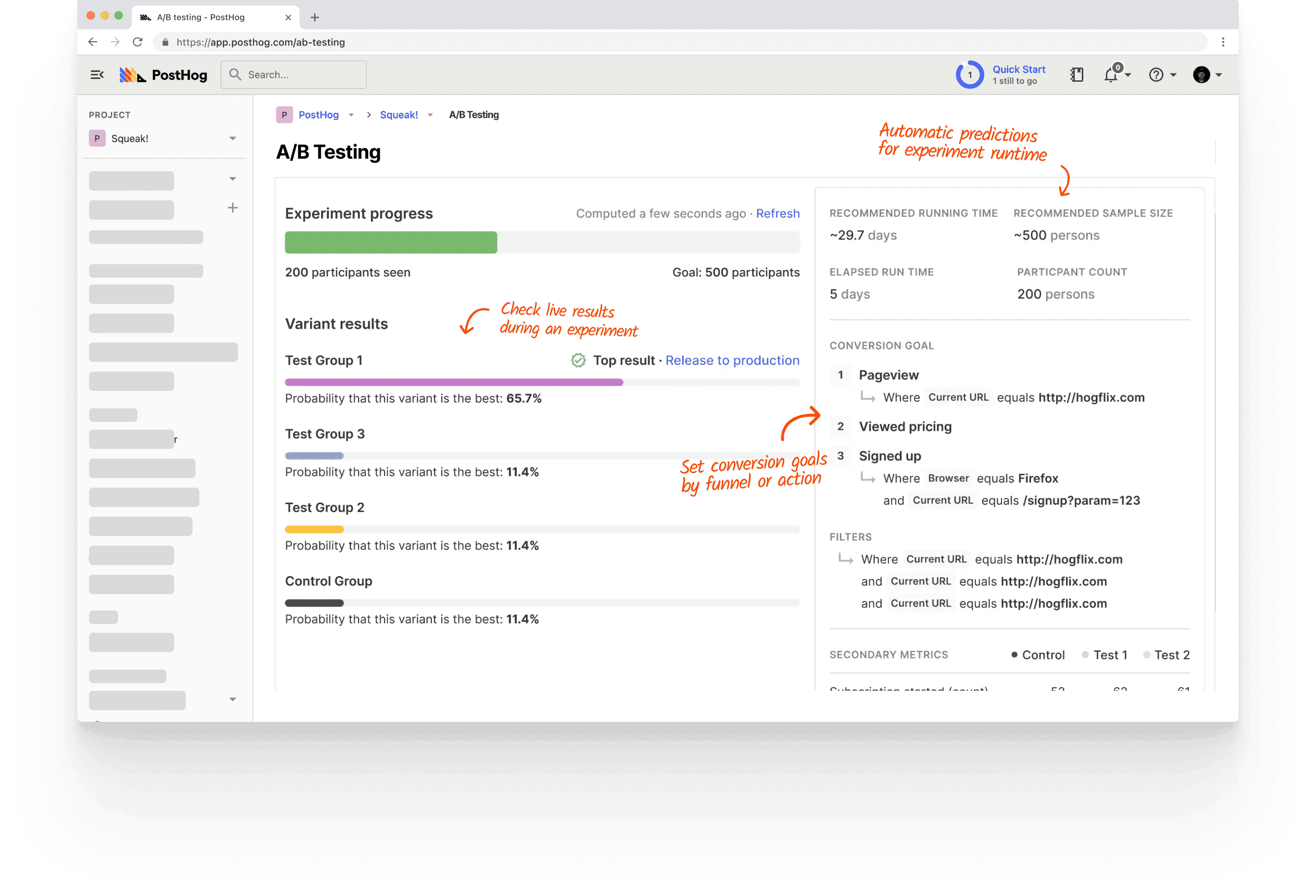

Customizable goals

Conversion funnels or trends, secondary metrics, and range for statistical significance


Targeting & exclusion rules

Set criteria for user location, user property, cohort, or group


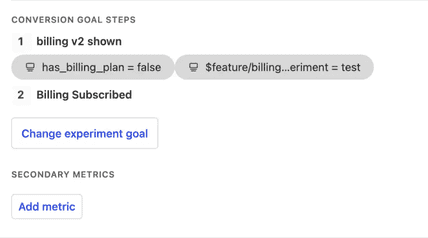

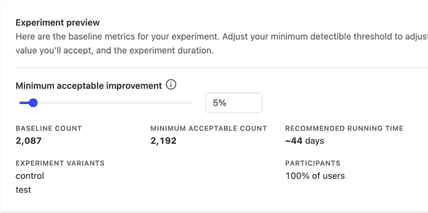

Recommendations

Automatic suggestions for duration, sample size, and the confidence threshold for declaring a winning variant
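A sample-size recommendation like this can be illustrated with a common rule of thumb. The sketch below uses Lehr's rule, n ≈ 16·p(1 − p)/δ² users per variant, where p is the baseline conversion rate and δ is the smallest absolute lift you care about. PostHog's own recommendation may use a different formula; the function names and the daily-traffic runtime estimate are hypothetical.

```python
import math


def sample_size_per_variant(baseline_rate: float, min_detectable_effect: float) -> int:
    """Users needed per variant, via Lehr's rule n = 16 * p(1 - p) / delta^2.

    The constant 16 corresponds to roughly 80% power at a two-sided 5%
    significance level. `min_detectable_effect` is an absolute difference
    in conversion rate (0.02 means detecting a move from 10% to 12%).
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if min_detectable_effect <= 0:
        raise ValueError("min_detectable_effect must be positive")
    raw = 16 * baseline_rate * (1 - baseline_rate) / min_detectable_effect ** 2
    return math.ceil(round(raw, 6))  # round first to absorb float noise


def estimated_days(baseline_rate: float, min_detectable_effect: float,
                   num_variants: int, daily_users: int) -> int:
    """Days to reach the sample size if `daily_users` enter the experiment each day."""
    total = sample_size_per_variant(baseline_rate, min_detectable_effect) * num_variants
    return math.ceil(total / daily_users)


print(sample_size_per_variant(0.10, 0.02))     # 3600
print(estimated_days(0.10, 0.02, 2, 1000))     # 8
```

Halving δ quadruples the required sample, which is why small expected lifts translate into long recommended runtimes.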

Built on Feature Flags

All the benefits of feature flags, with added functionality for statistically significant experiments

JSON payloads

Modify website content per variant without additional deployments

Split testing

Automatically split traffic between variants

Multivariate testing

Test up to 9 variants against a control

Dynamic cohort support

Add new users to an experiment automatically by setting a user property
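Automatic traffic splitting across a control and up to 9 test variants is commonly done with deterministic hashing, so a given user always lands in the same variant without any stored state. The sketch below assumes that approach; the SHA-256 hash, the `flag_key:user_id` seed string, and the function names are hypothetical and not PostHog's documented implementation.

```python
import hashlib

MAX_VARIANTS = 10  # the page says up to 9 test variants plus a control


def bucket(flag_key: str, user_id: str) -> float:
    """Map a (flag, user) pair to a stable number in [0, 1)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # The first 13 hex digits (52 bits) give an integer in [0, 16**13);
    # a float represents that ratio exactly, so the result stays below 1.
    return int(digest[:13], 16) / 16**13


def assign_variant(flag_key: str, user_id: str, variants: list[tuple[str, int]]) -> str:
    """Pick a variant for a user. `variants` is a list of (name, percentage) pairs."""
    if not 2 <= len(variants) <= MAX_VARIANTS:
        raise ValueError("need a control plus 1 to 9 test variants")
    if sum(pct for _, pct in variants) != 100:
        raise ValueError("rollout percentages must sum to 100")
    point = bucket(flag_key, user_id) * 100  # position on a 0-100 scale
    cumulative = 0
    for name, pct in variants:
        cumulative += pct
        if point < cumulative:
            return name
    return variants[-1][0]  # guard for float edge cases


variants = [("control", 50), ("test", 50)]
print(assign_variant("new-onboarding", "user-42", variants))  # same answer on every call
```

Including the flag key in the hash means the same user can fall into different variants across different experiments, which keeps experiments independent of each other.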

Answer all of these questions (and more) with PostHog A/B testing.

- Does this new onboarding flow increase conversion?

- How does this affect adoption in Europe?

- Will enterprise customers like this new feature?

Usage-based pricing

Use A/B testing for free, or enter a credit card for advanced features.

Either way, your first 1,000,000 requests every month are free.

| | Free | All other plans |
|---|---|---|
| | No credit card required | All features, no limitations |
| Requests | 1,000,000/mo | Unlimited |
| Data retention | 1 year | 7 years |

Features

- Boolean feature flags
- Multivariate feature flags & experiments
- Persist flags across authentication
- Test changes without code
- Multiple release conditions
- Release condition overrides
- Flag targeting by groups
- Local evaluation & bootstrapping
- Flag usage stats

A/B testing

- Funnel & trend experiments
- Secondary experiment metrics
- Statistical analysis
- Group experiments
- Multi-environment support

Monthly pricing

| Requests per month | Free | All other plans (price per request) |
|---|---|---|
| First 1 million | Free | Free |
| 1-2 million | - | $0.000100 |
| 2-10 million | - | $0.000045 |
| 10-50 million | - | $0.000025 |
| 50 million+ | - | $0.000010 |
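The request tiers above can be turned into a quick bill estimate. This sketch assumes graduated pricing (each rate applies only to the requests inside its own band) and that the listed prices are per request; `TIERS` and `monthly_cost` are hypothetical names, and the live pricing page remains the authority.

```python
from decimal import Decimal

# (upper bound of the band in requests, price per request in USD).
# None marks the open-ended "50 million+" band.
# Assumption: graduated pricing, so each rate applies only to the
# requests that fall inside its own band.
TIERS = [
    (1_000_000, Decimal("0")),          # first 1 million: free
    (2_000_000, Decimal("0.000100")),   # 1-2 million
    (10_000_000, Decimal("0.000045")),  # 2-10 million
    (50_000_000, Decimal("0.000025")),  # 10-50 million
    (None, Decimal("0.000010")),        # 50 million+
]


def monthly_cost(requests: int) -> Decimal:
    """Estimate one month's bill in USD for a given number of requests."""
    if requests < 0:
        raise ValueError("requests must be non-negative")
    total = Decimal("0")
    lower = 0
    for upper, price in TIERS:
        top = requests if upper is None else min(requests, upper)
        if top > lower:
            total += (top - lower) * price  # requests billed in this band
        if upper is None or requests <= upper:
            break
        lower = upper
    return total


print(monthly_cost(3_000_000))  # 1M free + 1M at $0.0001 + 1M at $0.000045 = 145.000000
```

`Decimal` is used instead of `float` so that tiny per-request prices multiplied by millions of requests don't pick up rounding error.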

FAQs

PostHog vs...

So, what's best for you?

Reasons a competitor might be better for you (for now...)

- No-code experiments or CMS capabilities
  - You'll still need a designer or engineer to create experiments.
- No integration with Google Ads
  - PostHog can't run ad experiments or enroll users in an experiment based on their engagement with an ad variant.

Reasons to choose

- Integration with other PostHog products
  - Attach surveys to experiments or view replays for a test group. Analyze results beyond your initial hypothesis or goal metric.
- Automated recommendations for sample sizes and runtime
  - An automatic significance calculator helps you identify the winning variant as quickly as possible.
- Robust targeting and exclusion options, including cohorts and location
  - Anything you monitor in analytics, you can target in an experiment.
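To make "significance calculator" concrete, here is a classic frequentist two-proportion z-test comparing a control's conversion rate with a variant's. PostHog's calculator may use a different statistical method (for example a Bayesian one); this test is swapped in purely for illustration, and the function name is hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion of control (a) and variant (b).

    Returns (z, two-sided p-value) using the pooled-variance z-test.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both groups converted at 0% or 100%: nothing to compare
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(100, 1000, 130, 1000)  # 10% vs 13% conversion
print(f"z = {z:.2f}, p = {p:.3f}")
```

For 10% vs 13% conversion on 1,000 users each, the p-value comes out a little under 0.05, so the variant would just clear a 5% significance threshold.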

Have questions about PostHog?

Ask the community or book a demo.

Featured tutorials

Visit the tutorials section for more.

Running experiments on new users

Optimizing the initial experience of new users is critical for turning them into long-term users. Products get only a limited amount of a new user's time and attention before that user churns.

How to set up A/B/n testing

A/B/n testing is an A/B test that compares multiple (n) variants instead of just two. It is especially useful for small but impactful changes with many possible options, such as copy, styles, or pages.

How to run holdout testing

Holdout testing is a type of A/B testing that measures the long-term effects of product changes. In holdout testing, a small group of users is not shown your changes for an extended period, typically for weeks or months after your experiment ends.

How to do A/A testing

An A/A test is the same as an A/B test except that both groups receive the same code or components. Teams run A/A tests to make sure their A/B testing service, functionality, and implementation work as expected and provide accurate results.
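The A/A idea can be checked with a quick simulation: when both groups share the same true conversion rate, a correctly working setup should call the difference "significant" in only about α (here 5%) of runs. This is a minimal sketch, assuming a frequentist two-proportion z-test and simulated users; the function names, the 20% conversion rate, and the group sizes are all hypothetical.

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa(trials: int = 1000, users_per_group: int = 500,
                rate: float = 0.2, alpha: float = 0.05, seed: int = 7) -> float:
    """Fraction of simulated A/A runs that wrongly report a significant difference."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(trials):
        # Both groups share the SAME true rate, so any "win" is pure noise.
        a = sum(rng.random() < rate for _ in range(users_per_group))
        b = sum(rng.random() < rate for _ in range(users_per_group))
        if p_value(a, users_per_group, b, users_per_group) < alpha:
            false_positives += 1
    return false_positives / trials


print(simulate_aa())  # should land near 0.05
```

A false-positive rate well above α in a real A/A test would point to a problem in variant assignment, event capture, or the analysis itself.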

Install & customize

Here are some ways you can fine-tune how you implement A/B testing.

Explore the docs

Get a more technical overview of how everything works in our docs.

Meet the team

PostHog works in small teams. The Feature Success team is responsible for building A/B testing.

(Shockingly, this team prefers their pizza without pineapple.)

Roadmap & changelog

Here’s what the team is up to.

Latest update

Feb 2024

Graphs and significance calculation added for secondary metrics

You've been able to add secondary metrics to A/B experiments in PostHog for a while, but we've now added much better reporting around them.

The new graphs and significance calculations help you determine whether your experiment has knock-on effects beyond the primary metric.

For example, you may test a change to your sign-up flow to improve conversion as the primary metric, but also need to know its impact on activation as a secondary metric. Now you can understand both easily!

Up next

Feature Success Analysis

Bringing together different parts of PostHog (flags, replay, surveys) so users can better analyze the success of a new feature.

Project updates

No updates yet. Engineers are currently hard at work, so check back soon!

Users & recordings linked to feature flags

We want to make it easier for those who use feature flags to get information on users attached to a particular feature flag, and gather more information on those users' experience through session recordings.

Project updates

No updates yet. Engineers are currently hard at work, so check back soon!

Questions?

See more questions (or ask your own!) in our community forums.


Pairs with...

PostHog products are natively designed to be interoperable using Product OS.

Product analytics

Run analysis based on the value of a test, or build a cohort of users from a test variant

Session replay

Watch recordings of users in a variant to discover nuances in why they did or didn’t complete the goal

Feature flags

Make changes to the feature flag the experiment uses, including the JSON payload for each variant

This is the call to action.

If nothing else has sold you on PostHog, hopefully these classic marketing tactics will.

PostHog Cloud

Digital download*

Not endorsed by Kim K

*PostHog is a web product and cannot be installed by CD.

We did once send some customers a floppy disk, but it was a Rickroll.